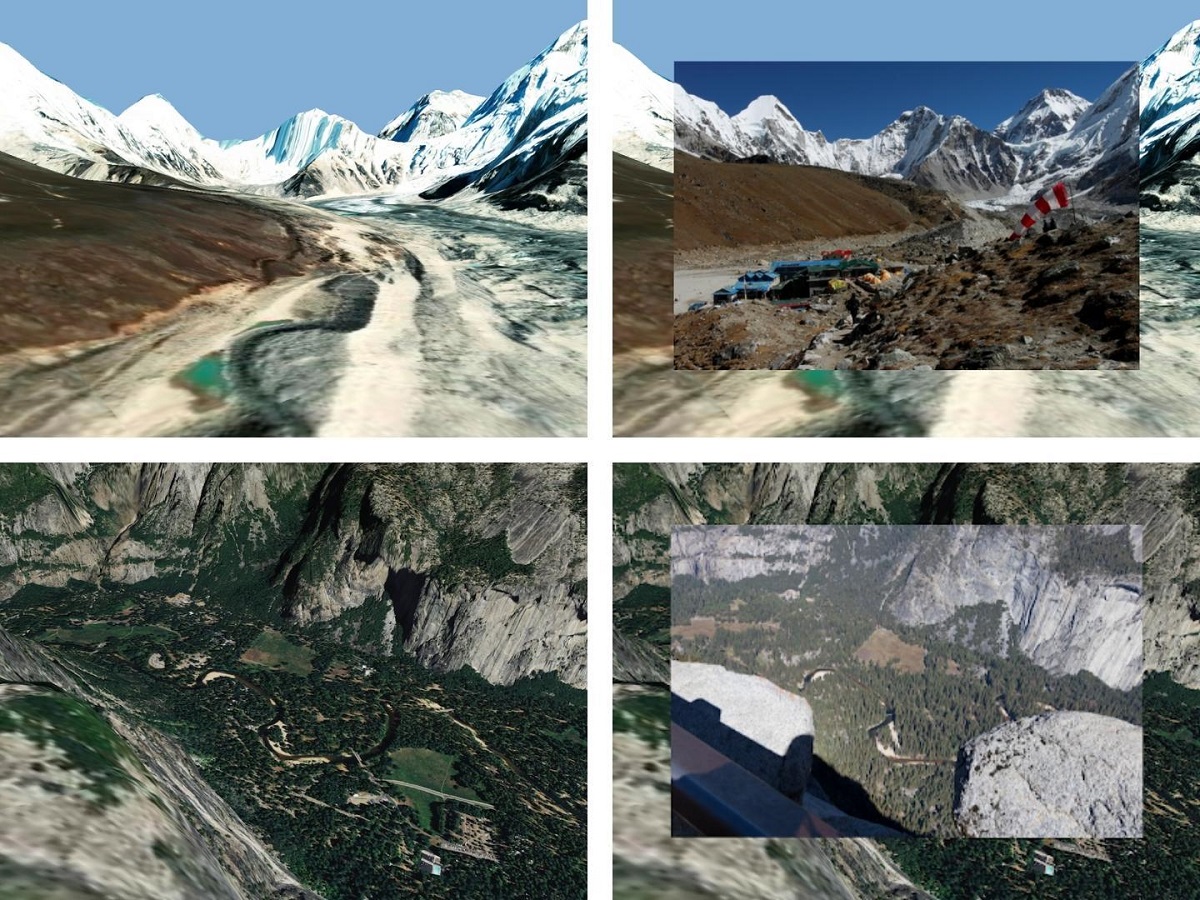

When a camera is pointed at the landscape, the new software detects prominent features in the image and cross-references them against a database of thousands of images and 3D terrain models. Combined with GPS coordinates, these visual cues are used to display information about the surroundings, such as the heights of hills, contour lines, hiking trails and distances between locations. Photo Credit: Freepik / Illustrative Photo.

Brno, Feb 7 (BD) – Researchers from the computational photography research group of the Faculty of Information Technology (FIT) at Brno University of Technology (BUT) have developed a new software tool in cooperation with Adobe Research. The tool was presented at the European Conference on Computer Vision (ECCV), and a patent for it is now pending.

When mobile phone users point their cameras at the landscape, the augmented reality (AR) tool shows them the names and heights of nearby hills, as well as hiking trails. Users can also capture the scene by taking a photo and then edit it in various ways, for example by sharpening the image.

“Our software can refine the position and orientation of the camera in the outdoor environment. In the mobile application, it will provide various information about the surroundings using AR – river and mountain names, contours or distance to the mountain hut – it can simply display any topographical information in real terrain,” explained the Head of the CPhoto@FIT research group, Martin Čadík.

The tool combines the phone's GPS position with visual cues from the photo to display a synthetic view of the landscape. It detects prominent features of the landscape on screen, such as the shapes of hills, rivers and forests, and then compares them with 3D terrain models to determine the position and orientation of the camera to within metres. The researchers used thousands of landscape photographs from their archives, along with images from the internet, to train a neural network that allows the tool to work automatically.

“We used to use these images to compare individual points, so we compared photos with photos. But this had a number of disadvantages: we couldn’t locate photos taken in places for which we had no images. We now compare photos directly with 3D terrain models. They cover the whole planet, so even places where people do not go have data, including textures from different seasons, which helps with localisation when the landscape changes,” said Čadík.

Users can also take advantage of the tool at home on their computers. The software records the exact location and orientation of the camera at the moment the photo was taken, allowing users to edit the image later and also to return to that location in virtual reality. Using virtual reality glasses, the photographer can show others what the surroundings looked like where the photo was taken. The software is considered a major step forward in computational photography, made possible by advances in neural networks and the availability of accurate, textured terrain models.

It was developed under a project of the Czech Ministry of Education, Youth and Sports, “Topographic image analysis using deep learning methods”. The FIT research team plans to extend the project through further research, with the aim of determining the location and orientation of the camera on a wider scale using a neural network and terrain models, but without relying on the GPS position.